Demo Mistral Chat

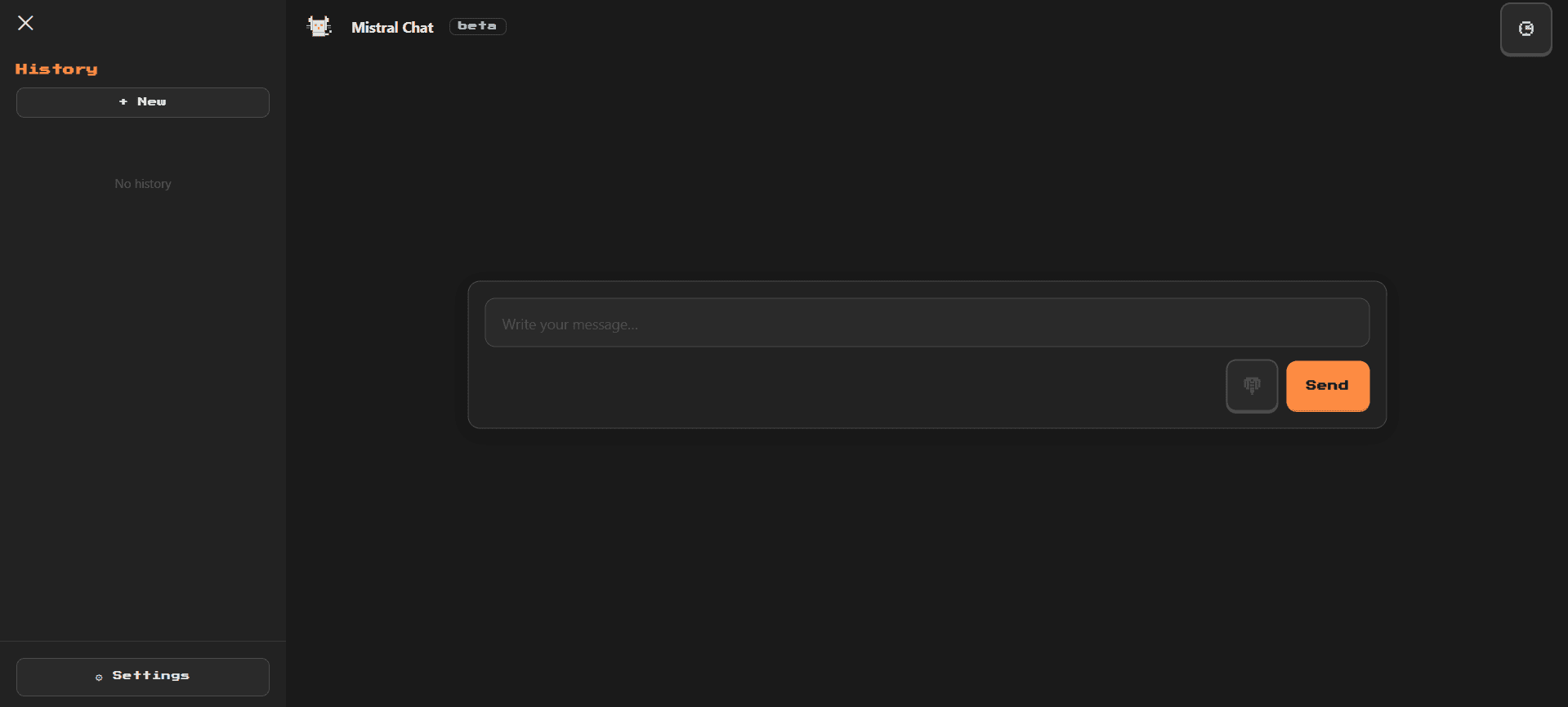

Live chat interface with Mistral AI and streaming

About the project

A modern chat web application that demonstrates Mistral AI capabilities. Built with Next.js 14 and deployed on Vercel Edge, it implements real-time response streaming for a fluid and responsive user experience. The project explores best practices for integrating LLMs into web applications, including context handling, prompt optimization, and efficient conversation state management.
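Context handling usually means deciding which earlier messages to resend with each request. Below is a minimal sketch of one common approach, not the project's actual code. It assumes a character budget as a rough stand-in for a token limit, and `trimHistory` and `ChatMessage` are hypothetical names. System messages are always kept, the newest turns are kept until the budget runs out, and the kept history is made to start on a user turn.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical helper: keeps all system messages plus as many of the most
// recent turns as fit in maxChars (characters used as a rough proxy for tokens).
function trimHistory(messages: ChatMessage[], maxChars: number): ChatMessage[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");

  // Whatever the system prompt uses is subtracted from the budget first.
  let budget = maxChars - system.reduce((n, m) => n + m.content.length, 0);

  // Walk backwards from the newest message, stopping at the first one that does not fit.
  const kept: ChatMessage[] = [];
  for (let i = rest.length - 1; i >= 0; i--) {
    const len = rest[i].content.length;
    if (len > budget) break;
    kept.unshift(rest[i]);
    budget -= len;
  }

  // Drop leading assistant replies so the trimmed history opens with a user turn.
  while (kept.length > 0 && kept[0].role !== "user") kept.shift();

  return [...system, ...kept];
}
```

Trimming from the oldest end keeps the most recent exchange intact, which is usually what the next answer depends on; a real token count from a tokenizer would give a tighter fit than characters.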

Technologies

Next.js, Mistral AI, TypeScript, Edge Functions, Streaming

Features

- Real-time response streaming

- Modern and responsive chat interface

- Conversation context handling

- Edge Functions for minimal latency

- Prompt optimization for better responses

- State management with React hooks

- Minimalist dark mode design

- Automatic deployment with Vercel
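Streaming starts with asking the model API for a streamed response. The sketch below only builds the request; it assumes Mistral's chat completions endpoint with Bearer authentication and a `stream: true` flag, and the default model name `mistral-small-latest` is an assumption to check against Mistral's current model list. `buildChatRequest` is a hypothetical helper, not taken from the project.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Assumed endpoint; verify against Mistral's API reference.
const MISTRAL_URL = "https://api.mistral.ai/v1/chat/completions";

// Hypothetical helper: returns the URL and fetch options for a streaming chat completion.
function buildChatRequest(
  apiKey: string,
  messages: ChatMessage[],
  model = "mistral-small-latest", // assumed model name
): { url: string; init: { method: string; headers: Record<string, string>; body: string } } {
  return {
    url: MISTRAL_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      // stream: true asks the API to send the answer incrementally as Server-Sent Events.
      body: JSON.stringify({ model, messages, stream: true }),
    },
  };
}
```

In a Next.js App Router route handler that opts into the Edge runtime with `export const runtime = "edge"`, the handler could pass these values to `fetch(url, init)` and return `new Response(upstream.body, { headers: { "Content-Type": "text/event-stream" } })`, so tokens reach the browser as soon as they arrive instead of after the full answer is generated.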

Technical challenges

- Implementation of SSE (Server-Sent Events) streaming

- Correct context handling between multiple messages

- Performance optimization in the Edge Runtime

- Error handling and automatic reconnection

- Balance between latency and response quality
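Part of what makes SSE streaming tricky is that network chunks do not line up with event boundaries: one chunk can end in the middle of a JSON payload. A minimal sketch of an incremental parser follows. It assumes the OpenAI-style format that Mistral's streaming endpoint uses, where each event is a `data: {json}` line carrying text in `choices[0].delta.content` and the stream ends with `data: [DONE]`. `createSseParser` is a hypothetical name.

```typescript
// Hypothetical incremental SSE parser. Call the returned function with each
// decoded text chunk; it buffers partial lines until they are complete.
function createSseParser(
  onToken: (text: string) => void,
  onDone: () => void,
): (chunk: string) => void {
  let buffer = "";
  let done = false;

  return (chunk: string) => {
    if (done) return;
    buffer += chunk;

    // Only complete lines are processed; the trailing fragment waits for the next chunk.
    const lines = buffer.split("\n");
    buffer = lines.pop() ?? "";

    for (const raw of lines) {
      const line = raw.trim(); // also strips a trailing "\r"
      if (!line.startsWith("data:")) continue; // skip blank separators and comments

      const payload = line.slice(5).trim();
      if (payload === "[DONE]") {
        done = true;
        onDone();
        return;
      }

      // JSON.parse throws on a malformed payload; the caller decides how to recover.
      const event = JSON.parse(payload);
      const token = event.choices?.[0]?.delta?.content;
      if (typeof token === "string" && token.length > 0) onToken(token);
    }
  };
}
```

Keeping the unfinished fragment in `buffer` is the key design choice: parsing each chunk on its own would fail whenever a payload is split across two reads.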

Learnings

- LLM integration with modern APIs

- Serverless architecture with Edge Functions

- Streaming patterns in web applications

- Prompt optimization for LLMs

- App deployment and monitoring on Vercel Edge
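On the client, a common streaming pattern is to read the response body incrementally and re-render as text arrives. The sketch below is an illustration, not the project's code: `readTextStream` is a hypothetical helper, and it assumes the server route forwards plain text. If the route forwards raw SSE events instead, each decoded chunk would need to be parsed for `data:` lines before display.

```typescript
// Hypothetical helper: reads a streamed fetch Response and reports the growing text.
async function readTextStream(
  response: Response,
  onUpdate: (textSoFar: string) => void,
): Promise<string> {
  if (!response.ok || response.body === null) {
    throw new Error(`Request failed with status ${response.status}`);
  }

  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let text = "";

  for (;;) {
    const result = await reader.read();
    if (result.done) break;
    // stream: true keeps multi-byte UTF-8 characters that span two chunks intact.
    text += decoder.decode(result.value, { stream: true });
    onUpdate(text);
  }

  text += decoder.decode(); // flush any bytes still buffered in the decoder
  return text;
}
```

In a React component, `onUpdate` could be a state setter from `useState`, so each chunk triggers a re-render of the assistant's message while it is still being generated.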

Screenshots

View 1