Llama Fine-tuning for Spanish Lyrics

Fine-tuning Llama 3.2 and 3.1 models for song-lyrics generation (archived)

About the project

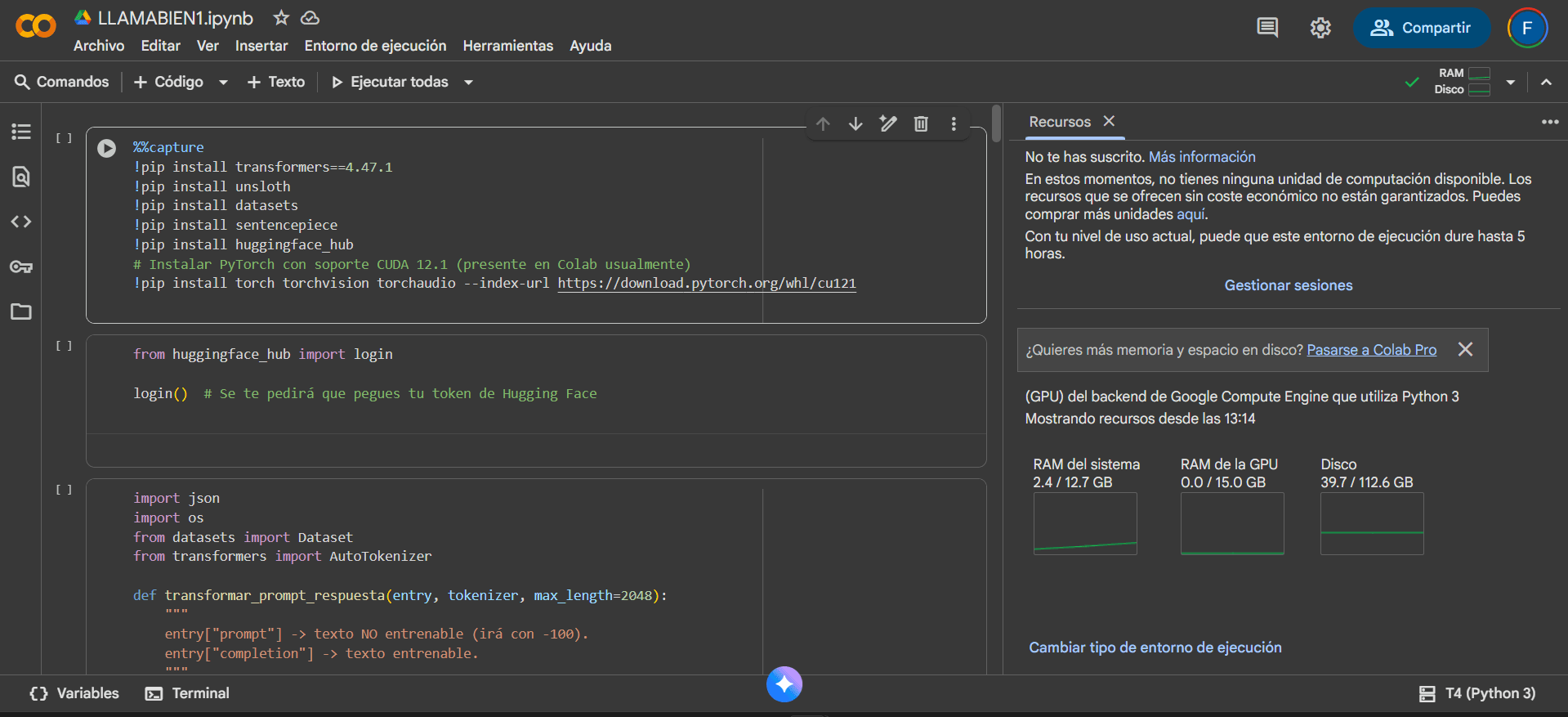

Fine-tuning project for Llama models (Llama 3.2 1B/3B and Llama 3.1 8B) that produces coherent Spanish song lyrics with consistent structure, rhyme, and theme. The setup balances output quality against cost using QLoRA (4-bit quantization) and Unsloth. The project includes a custom scraping pipeline built with Playwright that yielded a dataset of 10,000+ prompt-completion examples, a tokenization step that masks prompt tokens out of the loss, and a memory-efficient training setup on an A100 GPU that trains only ~24.3M parameters and produced high-quality lyrics in qualitative evaluation.
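The prompt masking mentioned above can be sketched in plain Python. This is a minimal illustration, not the project's code: it assumes prompt and completion are tokenized separately, and the helper `build_example`, the toy token ids, and the EOS handling are hypothetical. Prompt positions get the label -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so only completion tokens contribute to the loss. Hugging Face causal-LM models shift labels internally, so `labels` stays aligned position-for-position with `input_ids`.

```python
from typing import Dict, List

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_example(
    prompt_ids: List[int],
    completion_ids: List[int],
    eos_id: int,
    max_len: int,
) -> Dict[str, List[int]]:
    """Hypothetical helper: join prompt + completion and hide the prompt from the loss."""
    input_ids = prompt_ids + completion_ids + [eos_id]
    # Prompt positions are masked; completion tokens and EOS are learned.
    labels = [IGNORE_INDEX] * len(prompt_ids) + completion_ids + [eos_id]
    # Truncate both lists identically so every label stays under its token.
    input_ids, labels = input_ids[:max_len], labels[:max_len]
    return {
        "input_ids": input_ids,
        "attention_mask": [1] * len(input_ids),
        "labels": labels,
    }


if __name__ == "__main__":
    # Toy token ids; real ids would come from the Llama tokenizer.
    example = build_example([101, 102, 103], [7, 8], eos_id=2, max_len=16)
    print(example["labels"])  # [-100, -100, -100, 7, 8, 2]
```

Truncating both lists with the same `max_len` is the important design choice: truncating only one of them would silently shift every label onto the wrong token.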

Technologies

PyTorch, Llama, Fine-tuning, QLoRA, Hugging Face, Unsloth, NLP, Transformers, bitsandbytes, PEFT

Features

- Fine-tuning of Llama 3.2 (1B/3B) and Llama 3.1 (8B) with 4-bit QLoRA

- Custom dataset of 10,000+ prompt-completion examples

- Automated scraping pipeline with Playwright for page capture and parsing

- Tokenization with prompt masking (prompt tokens get labels=-100, so the loss covers only the completion)

- Optimized training with Unsloth (~2× faster)

- A100 configuration: 5 epochs, 3,200 steps, effective batch size 8

- ~24.3M trainable parameters with LoRA adapters (r=16)

- Gradient checkpointing and memory optimizations

- Evaluation of thematic adherence, repetition, and output length

- Optimized balance between quality and inference cost
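The ~24.3M trainable-parameter figure can be checked by hand. A LoRA adapter of rank r on a linear layer with input width d_in and output width d_out adds r * (d_in + d_out) parameters (the A and B matrices). The sketch below assumes, since the page does not say, that adapters were attached to all seven linear projections of every decoder layer (q/k/v/o and gate/up/down), and it uses the published model shapes; the `LlamaShape` class and the constants are illustrative. Under those assumptions r=16 gives exactly 24,313,856 for the Llama 3.2 3B shape, about 11.3M for 1B, and about 41.9M for 8B, which suggests the stated figure refers to the 3B model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LlamaShape:
    num_layers: int
    hidden: int        # model width (q_proj/o_proj in and out)
    intermediate: int  # MLP width (gate_proj/up_proj out, down_proj in)
    kv_dim: int        # num_key_value_heads * head_dim (k_proj/v_proj out)


# Published shapes (assumed from the models' config.json files).
LLAMA_3_2_1B = LlamaShape(num_layers=16, hidden=2048, intermediate=8192, kv_dim=8 * 64)
LLAMA_3_2_3B = LlamaShape(num_layers=28, hidden=3072, intermediate=8192, kv_dim=8 * 128)
LLAMA_3_1_8B = LlamaShape(num_layers=32, hidden=4096, intermediate=14336, kv_dim=8 * 128)


def lora_params(shape: LlamaShape, r: int) -> int:
    """LoRA params (A: r x d_in, B: d_out x r) on all seven projections of every layer."""
    h, i, kv = shape.hidden, shape.intermediate, shape.kv_dim
    projections = [
        (h, h),   # q_proj
        (h, kv),  # k_proj
        (h, kv),  # v_proj
        (h, h),   # o_proj
        (h, i),   # gate_proj
        (h, i),   # up_proj
        (i, h),   # down_proj
    ]
    per_layer = sum(r * (d_in + d_out) for d_in, d_out in projections)
    return shape.num_layers * per_layer


if __name__ == "__main__":
    for name, shape in [("1B", LLAMA_3_2_1B), ("3B", LLAMA_3_2_3B), ("8B", LLAMA_3_1_8B)]:
        print(name, f"{lora_params(shape, r=16):,}")
```

Running it prints 24,313,856 for the 3B line; changing the target-module list or the rank changes the count linearly, which makes this a quick sanity check when tuning r.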

Technical challenges

- Building a robust scraping and text-normalization pipeline

- Implementing correct prompt masking so the loss is computed only on completions

- Hyperparameter optimization (rank, lr, batch, max_seq_length)

- Handling VRAM limitations with quantization techniques

- Balance between generative quality and computational cost

- Qualitative evaluation of semantic and structural coherence
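The text-normalization step of the scraping pipeline is not detailed on this page. As a hedged sketch of the cleanup that lyrics scraped from HTML commonly need, the hypothetical `normalize_lyrics` below applies Unicode NFC (so accented Spanish characters such as ó and ñ have one canonical encoding), replaces non-breaking spaces, drops zero-width characters, unifies line endings, and collapses runs of blank lines into single stanza breaks. The rule set is an assumption, not the project's.

```python
import re
import unicodedata
from typing import List

# Hypothetical cleanup rules for scraped lyrics; not the project's published pipeline.
ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\ufeff"))  # ZWSP, ZWNJ, ZWJ, BOM -> deleted
SPACES = re.compile(r"[ \t]+")


def normalize_lyrics(raw: str) -> str:
    # Canonical composition: "o" + U+0301 becomes the single code point "ó".
    text = unicodedata.normalize("NFC", raw)
    text = text.replace("\u00a0", " ").translate(ZERO_WIDTH)
    text = text.replace("\r\n", "\n").replace("\r", "\n")

    # Collapse repeated spaces/tabs and trim each line.
    lines = [SPACES.sub(" ", line).strip() for line in text.split("\n")]

    # Keep at most one blank line between stanzas; drop leading blank lines.
    out: List[str] = []
    for line in lines:
        if line == "" and (not out or out[-1] == ""):
            continue
        out.append(line)
    # Drop a trailing stanza break left at the end of the song.
    while out and out[-1] == "":
        out.pop()
    return "\n".join(out)


if __name__ == "__main__":
    print(repr(normalize_lyrics("Verso\u00a0uno  \r\n\r\n\r\nSegunda\u200b línea \n\n")))
```

Keeping a single blank line between stanzas preserves the structural signal the model is meant to learn, while removing the layout noise that HTML extraction tends to introduce.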

Learnings

- Advanced fine-tuning techniques with limited resources

- QLoRA architecture and memory optimizations (4-bit)

- Unsloth integration for training acceleration

- Data pipeline design for text generation

- Evaluation and tuning of Spanish generative models

- Trade-offs between model size, quality, and latency
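The size-versus-memory trade-off behind 4-bit QLoRA comes down to simple arithmetic: weight memory is roughly parameters × bits per parameter / 8 bytes. The sketch below compares 16-bit and 4-bit weights for the three model sizes using approximate public parameter counts (assumed here: 1.23B, 3.21B, 8.03B). It is a weights-only lower bound: it ignores layers that are not quantized (typically embeddings, norms, and the output head), quantization constants, activations, and LoRA optimizer state, so real VRAM use is higher.

```python
# Approximate published parameter counts (assumed; check the model cards).
MODELS = {
    "Llama 3.2 1B": 1.23e9,
    "Llama 3.2 3B": 3.21e9,
    "Llama 3.1 8B": 8.03e9,
}


def weight_gib(n_params: float, bits_per_param: float) -> float:
    """Weights-only memory in GiB: n_params * bits / 8 bytes, over 2**30 bytes per GiB."""
    return n_params * bits_per_param / 8 / 2**30


if __name__ == "__main__":
    for name, n in MODELS.items():
        print(f"{name}: 16-bit ~ {weight_gib(n, 16):.1f} GiB, 4-bit ~ {weight_gib(n, 4):.1f} GiB")
```

The 4× reduction in weight memory is what lets the 8B model's frozen base fit comfortably alongside activations and adapter state on a single GPU.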

Screenshots: Training, Results

Personal experimentation project in LLM fine-tuning. Training was performed on an A100 GPU.
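The A100 schedule listed under Features (5 epochs, 3,200 steps, effective batch size 8) can be related to dataset size with a short calculation, assuming one step is one optimizer update over the effective batch and no step cap overrides the epoch count. The split of the effective batch into a per-device batch of 2 and 4 gradient-accumulation steps is a hypothetical example; only the product is given. Working backwards, 3,200 / 5 × 8 gives about 5,120 examples per epoch, whereas 10,000 examples would take 1,250 steps per epoch; the page does not say whether training used a subset or split of the dataset.

```python
import math


def steps_per_epoch(n_examples: int, per_device_batch: int, grad_accum: int) -> int:
    """Optimizer updates per epoch; the last partial batch counts as one update."""
    effective_batch = per_device_batch * grad_accum
    return math.ceil(n_examples / effective_batch)


def implied_examples_per_epoch(total_steps: int, epochs: int, effective_batch: int) -> int:
    """Invert the schedule: examples seen per epoch given total optimizer steps."""
    return total_steps // epochs * effective_batch


if __name__ == "__main__":
    # Hypothetical split of the stated effective batch size of 8.
    per_device_batch, grad_accum = 2, 4
    print(implied_examples_per_epoch(3200, 5, per_device_batch * grad_accum))  # 5120
    print(steps_per_epoch(10_000, per_device_batch, grad_accum))  # 1250
```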